Efficient Research in Cybersecurity — How AI Tools Streamline CIS Hardening Analysis

Posted on June 27, 2025

If you’ve ever tried to navigate the depths of CIS benchmarks or other security hardening guides, you know how tedious and fragmented the research process can be. Finding up-to-date, actionable recommendations for a specific setting often means juggling official documentation, community forums, and best-practice blogs. AI research tools can take over much of that legwork and make research, analysis, and documentation in cybersecurity noticeably more efficient and consistent.

Why Use AI-Powered Research in Cybersecurity?

The complexity and pace of cybersecurity are constantly increasing. Traditional research is time-consuming, error-prone, and often hard to reproduce. AI tools like Perplexity AI offer a significant boost: they aggregate relevant sources, summarize complex content, and deliver structured, actionable analyses. This not only saves time but also raises the quality and consistency of your work.

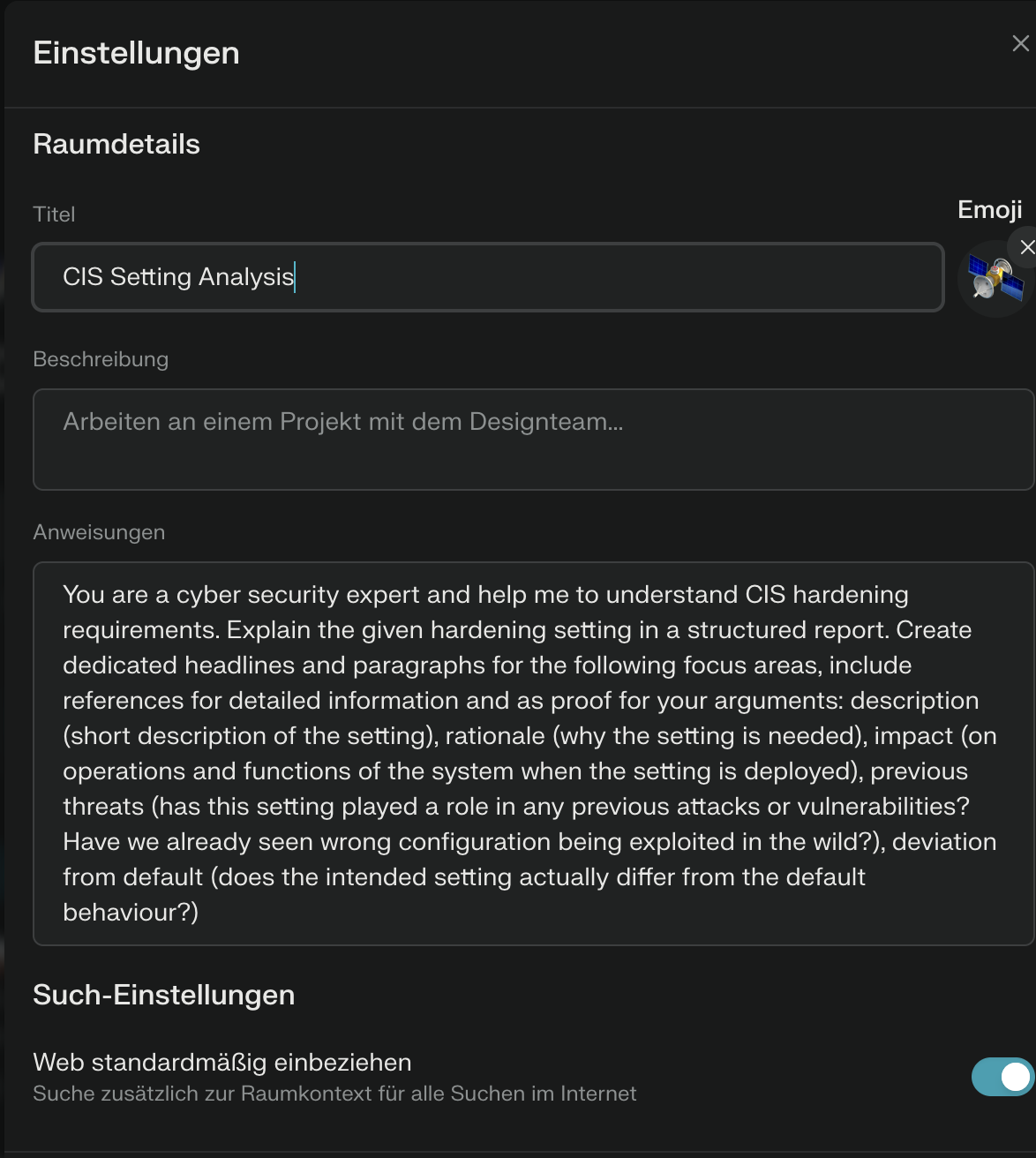

My Custom Setup: CIS Setting Analysis Space

To get the most out of AI, I created a dedicated “Space” for analyzing CIS hardening settings. This space is configured to deliver a structured report for any setting you want to review—covering description, rationale, impact, threat landscape, deviation from default, and references for further reading.

Here’s what the configuration looks like:

Prompt used in the Space:

You are a cyber security expert and help me to understand CIS hardening requirements. Explain the given hardening setting in a structured report. Create dedicated headlines and paragraphs for the following focus areas, include references for detailed information and as proof for your arguments: description (short description of the setting), rationale (why the setting is needed), impact (on operations and functions of the system when the setting is deployed), previous threats (has this setting played a role in any previous attacks or vulnerabilities? Have we already seen wrong configuration being exploited in the wild?), deviation from default (does the intended setting actually differ from the default behaviour?)

Example Output: “Configure Solicited Remote Assistance”

Here’s a real example generated by this setup, analyzing the CIS recommendation to disable Solicited Remote Assistance in Windows:

# CIS Setting Analysis: Configure Solicited Remote Assistance

This report provides an in-depth analysis of the CIS hardening requirement to disable Solicited Remote Assistance in Windows environments. This Level 1 control (18.9.35.2) is an essential security measure for organizations seeking to reduce their attack surface and prevent unauthorized access.

## Description

The 'Configure Solicited Remote Assistance' policy setting allows administrators to control whether users can request remote assistance from others. When enabled, users can initiate Remote Assistance requests through email, file transfer, or instant messaging applications, allowing external parties to view or control their computer. When disabled, the ability to request such remote help is completely turned off, preventing any solicited remote connections to the system[1][3][7].

This setting is specifically referenced in CIS benchmarks for Windows operating systems as a Level 1 recommendation, meaning it's considered fundamental for secure system configuration across all environments[1][2].

## Rationale

This security control is necessary because Remote Assistance creates a potential pathway for unauthorized access to system resources. Even though solicited assistance is explicitly requested by users, it introduces significant risks:

1. It allows external parties to view sensitive information on screen

2. When full control is granted, it permits complete manipulation of the system by remote helpers

3. It bypasses normal network access controls by opening direct connections[1][9]

The CIS benchmarks and STIG guidance designate this as a high-severity security issue, reflecting its importance in maintaining a secure computing environment[9]. By disabling this feature, organizations eliminate a vector that could be exploited by malicious actors posing as legitimate helpers.

## Impact

When this setting is disabled, users will experience the following operational impacts:

1. Users cannot use email or file transfer mechanisms to request remote technical assistance

2. Instant messaging applications cannot be used to establish remote viewing or control connections

3. Help desk and IT support teams must utilize alternative remote support tools that are corporate-approved and properly secured[1][3][7]

Despite these limitations, the security benefit outweighs the impact. According to source documentation, there is a "slight risk that a rogue administrator will gain access to another user's desktop session" when enabled, even though connections require explicit user permission[1]. By disabling the feature entirely, this risk is completely eliminated.

## Previous Threats

While the search results don't explicitly detail specific attacks exploiting this feature, the high severity rating assigned by security frameworks like STIG indicates it has been identified as a significant security concern based on risk analysis[9].

The capability to view and potentially control another user's system creates an opportunity for:

- Social engineering attacks where attackers convince users to grant remote access

- Exposure of sensitive data through screen sharing

- Potential system compromise if full control is granted to malicious actors

Security professionals recognize this as a concerning attack vector, which is why it's included in hardening guidelines despite the lack of widely publicized exploits specifically targeting this feature.

## Deviation from Default

Disabling Solicited Remote Assistance represents a clear deviation from the default Windows configuration:

1. In a default Windows installation, the feature is neither explicitly enabled nor disabled

2. Users can freely turn on or off Solicited Remote Assistance through System Properties in Control Panel

3. Users can configure specific Remote Assistance settings according to their preferences[3][7]

The CIS recommendation requires explicitly disabling this capability through Group Policy, removing user discretion and enforcing a more secure posture. This is accomplished by setting the registry value "fAllowToGetHelp" to "0" in the path "HKEY_LOCAL_MACHINE\Software\Policies\Microsoft\Windows NT\Terminal Services\"[7][9].

To implement this setting, administrators can configure the policy through Group Policy at: Computer Configuration > Administrative Templates > System > Remote Assistance > "Configure Solicited Remote Assistance" > Disabled[9].

## References

The requirement to disable Solicited Remote Assistance appears consistently across security frameworks, including CIS Benchmarks for Windows 11, Windows Server 2019, and Windows Server 2022, demonstrating its importance as a fundamental security control for Windows environments[1][2][8].

[1] https://www.syxsense.com/securityarticles/cis_benchmarks/syx-1033-12411.html

[2] https://rayasec.com/wp-content/uploads/CIS-Benchmark/Microsoft-Windows-Desktop/CIS_Microsoft_Windows_11_Enterprise_Benchmark_v4.0.0.pdf

[3] https://learn.microsoft.com/en-us/windows/client-management/mdm/policy-csp-remoteassistance

[4] https://github.com/wazuh/wazuh/blob/master/ruleset/sca/windows/cis_win2022.yml

[5] https://www.anoopcnair.com/configure-solicited-remote-assistance-intune/

[6] https://www.irs.gov/pub/safeguard/safeguard-microsoft-windows-11-scsem-v30-08122024.xlsx

[7] https://admx.help/?Category=Windows_10_2016&Policy=Microsoft.Policies.RemoteAssistance%3A%3ARA_Solicit

[8] https://rayasec.com/wp-content/uploads/CIS-Benchmark/Microsoft-Windows-Server/CIS_Microsoft_Windows_Server_2019_STIG_Benchmark_v3.0.0.pdf

[9] https://www.stigviewer.com/stig/windows_server_20122012_r2_member_server/2020-06-16/finding/V-3343

[10] https://learn.microsoft.com/en-us/answers/questions/2194375/remote-assistance?forum=windowserver-all

[11] https://www.tenable.com/audits/items/CIS_MS_Windows_Server_2003_MS_v3.1.0.audit:7be597d5c05752d65e2c56d2c0bac24a

[12] https://www.tenable.com/audits/items/CIS_MS_InTune_for_Windows_10_Level_1_v1.1.0.audit:fc1bf902c3bbeebd22c5c7fbb38e9f2e

[13] https://www.tenable.com/audits/items/CIS_Microsoft_Windows_10_Enterprise_v3.0.0_L1.audit:d5a532c710c1d0e1facab7617fbc01ae

[14] https://www.tenable.com/audits/CIS_Microsoft_Windows_11_Enterprise_v2.0.0_L1/changelog

[15] https://www.irs.gov/pub/safeguard/safeguard-microsoft-windows-10-scsem-v60-08122024.xlsx

[16] https://www.simp-project.com/docs/simp-enterprise/windows-cis-coverage.html

[17] https://www.coursehero.com/file/p49uormc/This-Group-Policy-section-is-provided-by-the-Group-Policy-template/

[18] https://github.com/scipag/HardeningKitty/blob/master/lists/finding_list_cis_microsoft_windows_server_2022_22h2_2.0.0_machine.csv

How to Set Up Your Own Research Space

Want to streamline your own research and documentation process? Here’s how to set up a similar space:

- Create an Account on Perplexity AI: Use the promo link at the end of this post to save 50% on your first month.

- Create a New Space: Give your space a clear name, e.g., “CIS Setting Analysis.”

- Define the Prompt: Use a detailed instruction like the one shown above. Specify the structure and focus areas you want in each report.

- Test and Refine: Try out your prompt with different settings. Adjust as needed for clarity and completeness.

- Document and Share: Use the generated reports for your own documentation, compliance checks, or as a basis for team discussions.

Pro Tip: Spaces can be shared and used collaboratively, enabling consistent, high-quality analyses across your team.

Caution: Responsible Use of AI Tools in Cybersecurity Research

While AI-powered research spaces like the CIS Setting Analysis Space offer tremendous benefits for efficiency and insight, it’s essential to approach their use with informed caution. Here are several key considerations and best practices:

1. Human Oversight Remains Critical

AI tools can process and summarize vast amounts of information, but they are not infallible. Over-reliance on AI without expert review may lead to missed threats, misinterpretations, or a false sense of security. Cybersecurity experts must always validate AI-generated results, provide context, and make final decisions based on a holistic understanding of the environment.

2. Data Quality and Bias

The effectiveness of AI depends heavily on the quality and diversity of the data it’s trained on. Poor or biased data can result in inaccurate analyses, false positives, or overlooked vulnerabilities. Regularly update and audit your AI models, and ensure that input data is representative and up-to-date.

3. False Positives and Negatives

AI systems can generate both false alarms (flagging benign activity as malicious) and false negatives (missing real threats). These errors can be costly and disruptive if not carefully managed. Always combine AI-driven insights with manual review and established security processes.

4. Vulnerability to Adversarial Attacks

AI models themselves can be targeted by sophisticated attackers, who may attempt to manipulate inputs to evade detection or trigger incorrect responses. Stay aware of adversarial risks and monitor for signs of AI system manipulation.

5. Privacy, Compliance, and Ethics

AI tools may process sensitive or regulated data. Ensure your use of AI complies with data protection laws (such as GDPR or HIPAA) and follows ethical guidelines. Protect user privacy by anonymizing data where possible and maintaining transparency about how AI decisions are made.

6. Transparency and Explainability

Many AI systems operate as “black boxes,” making it difficult to understand how decisions are reached. This lack of transparency can reduce trust and complicate compliance. Favor tools and workflows that provide clear reasoning and references for their outputs, and be prepared to explain and justify AI-driven recommendations.

7. AI Is a Supplement, Not a Replacement

AI augments human expertise, but should not replace it. The best results come from integrating AI insights with your existing knowledge, processes, and professional judgment.

By recognizing these risks and implementing best practices, you can harness the strengths of AI in cybersecurity research while minimizing potential downsides. Always treat AI as a powerful assistant—one that requires oversight, context, and responsibility to deliver its full value.
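As a concrete instance of validating AI output, the registry claim in the example report can be checked on a test machine. The following is a minimal sketch, assuming Python on Windows. The key path and value name are copied from the report and should be confirmed against the benchmark version you apply; the `classify` labels are illustrative. The script only reads the value and changes nothing.

```python
import sys

# Key path and value name as stated in the example report (under HKEY_LOCAL_MACHINE).
POLICY_KEY = r"Software\Policies\Microsoft\Windows NT\Terminal Services"
POLICY_VALUE = "fAllowToGetHelp"


def classify(value):
    """Map the raw registry value to a finding (labels are illustrative)."""
    if value is None:
        return "not configured"
    return "compliant" if value == 0 else "non-compliant"


def read_policy_value():
    """Read the policy value from HKLM; return None if the key or value is absent."""
    import winreg  # Windows-only standard library module

    try:
        with winreg.OpenKey(winreg.HKEY_LOCAL_MACHINE, POLICY_KEY) as key:
            value, _value_type = winreg.QueryValueEx(key, POLICY_VALUE)
            return value
    except FileNotFoundError:
        return None


if __name__ == "__main__" and sys.platform == "win32":
    print(classify(read_policy_value()))
```

A result of "not configured" means the policy has not been set through Group Policy, which is not the same as the explicit "Disabled" state the recommendation asks for.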

Further Use Cases for AI Spaces in Cybersecurity

AI-powered spaces aren’t just useful for hardening analysis. Here are more ideas for cybersecurity professionals:

- Policy Reviews: Automated, structured reviews of security policies or firewall rules.

- Vulnerability Analysis: Rapid assessment and documentation of CVEs or zero-day vulnerabilities.

- Compliance Checks: Compare system configurations against regulatory requirements.

- Threat Intelligence: Summarize and contextualize current threat reports from multiple sources.

- Security Awareness Training: Generate learning materials and quizzes for team education.

Any scenario that requires structured research and clear documentation can benefit from this approach.

Conclusion & Try It Yourself

AI tools like Perplexity AI make cybersecurity research and analysis faster, more reliable, and more actionable. My custom CIS Setting Analysis Space has dramatically improved both the speed and quality of my documentation and compliance work. Try it for yourself. Use this promo link to get 50% off your first month:

perplexity.ai/pro?referral_code=MHW6RT12

How are you using AI tools in your security practice? Share your experiences and ideas in the comments!